Setting up Synapse On-Demand to serve data to Power BI XMLA processing

August 28, 2020

Querying Synapse SQL On-Demand is fairly straightforward, that is until you start straying outside the bounds of available tutorials. Documentation is still a bit spotty, and the you are not helped by the fact that two technologies (the solution formerly known as Azure SQL Data Warehouse and the new Synapse SQL-On Demand) both go under the moniker of Synapse Analytics.

Much of the information of the topic is too broad. Samples such as this one aims to show you how to query a data set in no less than three different ways, all at the same time. This can be confusing, and even to the people maintaining this information it can be difficult to keep it up to date. Here, therefore, I am going to focus on a very specific set of steps that focuses on solving a very specific problem.

How to query Databricks Delta Table in Azure Data Lake Gen 2 (ADLS) using a SQL-authenticated user to access Synapse SQL On-Demand (SSOD) with Power BI Premium XMLA, set up through Tabular Editor.

There are a couple of steps that need to be carried out in order for this to be possible:

- The Delta Table is assumed to already be in

ADLS. - A Shared Access Signature (

SAS) token needs to be generated inADLS. - In Synapse SQL: Create Credential.

- In Synapse SQL: Create Login.

- In Synapse SQL: Create User.

- In Synapse SQL: Grant access to Credential.

- In Synapse SQL: Create External Dataset.

- In Synapse SQL: Create View.

- In Tabular Editor: Create Legacy Data Source.

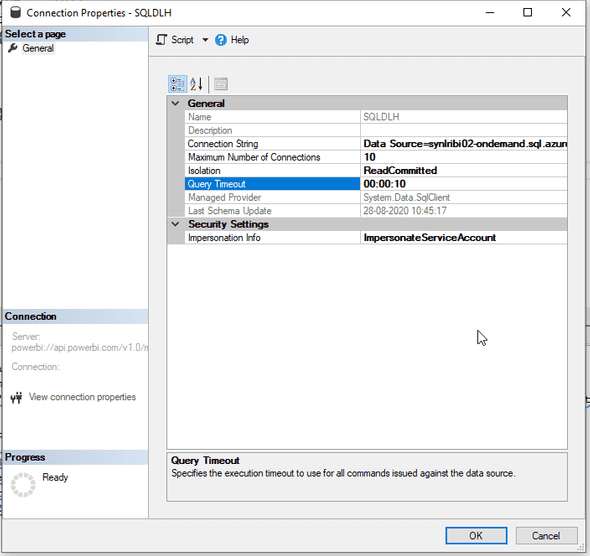

- In Tabular Editor: Check the timeout settings of the Legacy Data Source.

- In Tabular Editor: Get table.

- In Tabular Editor: Double check that the table uses the correct data source.

- In Sql Server Management Studio: Process Table.

The SAS token is used to authenticate across services. SSOD and ADLS are both Azure services but they embody two separate solutions. When you use SSOD in the Synapse Analytics interface, you are logged into Azure through your browser and the web session is able to share your identity between all the different services you use. Meanwhile, when connecting through an SQL-authenticated user identity, we don’t have the AD helping us, nor do we have the web session.

To get a SAS token, go here and generate one. You can also navigate to the page by going to Azure Portal, opening your storage account and finding Shared access signature in the pane on the left. You can choose among a number of settings for your token. I went and added: Service, Container, Object.

Once you have generated your token, copy it to notepad or similar. Obviously, you should only save this in a secure location, so don’t go writing it on post-its and whatnot. Keep it handy for now though, we’ll use it shortly.

Now we need to set up our objects on SSOD. So head to the Synapse Analytics SQL Query interface or log onto your Synapse endpoint through SSMS.

If you don’t know the url of your endpoint, go to the Azure Portal, find your Synapse Workspace and open the Properties pane. You are looking for the field named SQL on-demand endpoint. It should look like this:

SYNAPSEWORKSPACENAME-ondemand.sql.azuresynapse.net

Creating a Credential

Once in your SQL On-Demand interface, set your active database to where your source data is located (it might be pointing to master at first which wont work), create a Credential by running the following query, substituting YOURSASTOKEN with your SAS Token.

IF EXISTS (

SELECT * FROM sys.database_scoped_credentials

WHERE name = 'sqlondemand'

)

DROP DATABASE SCOPED CREDENTIAL [sqlondemand]

GO

CREATE DATABASE SCOPED CREDENTIAL [sqlondemand]

WITH IDENTITY='SHARED ACCESS SIGNATURE',

SECRET = YOURSASTOKEN

GONotice that the IDENTITY is set to SHARED ACCESS SIGNATURE. Don’t change this. Even if it seems to be just a string, it is needed to perform the authentication.

Also notice that our credential is DATABASE SCOPED. This means that it is only usable in the specific database in which it is created — not in the rest of the databases across the server.

Create a Data Source

In order to link up our queries to the data in ADLS, we need to define the location as a data source. The EXTERNAL key word means just that: The data we are referring to is located in ADLS, not in Synapse. Here, we name the data source ADLSStorage, and we point it to the storage account. Of course, this is the definition of our link to the data, so we supply our credential in order to be able to control who uses this link — only users which are allowed control of the CREDENTIAL will get access to the data source and its contents. We’ll get back to this in the next step.

CREATE EXTERNAL DATA SOURCE ADLSStorage WITH (

LOCATION = 'https://YOURSTORAGEACCOUNTNAME.blob.core.windows.net',

CREDENTIAL = sqlondemand

);Creating Authentication objects

We need to create a USER object and a LOGIN for said user. Or, as it turns out, the other way around. Once we have created both, we grand control of the CREDENTIAL to the USER. Often people will name the USER and the LOGIN the same thing. You can do that, I have avoided doing so for clarity’s sake. Finally, we add the role of db_owner to the USER. This might be overkill, but we can discuss security some other day.

CREATE LOGIN my_login WITH PASSWORD = '%!431*$21d-*';

CREATE USER my_user FROM LOGIN my_login;

GRANT CONTROL ON DATABASE SCOPED CREDENTIAL::sqlondemand TO [my_user]

ALTER ROLE db_owner ADD MEMBER [my_user]Creating a View

Now that the CREDENTIAL is set and the DATA SOURCE has been defined, we can start looking at some actual data queries. Synapse uses a command called OPENROWSET to access external data while interpreting them as database tables. Defining a view on top of this is meant to make handling the data feel more natural. We can lock down all the formalities such as file paths and typing in the view, and when later we go and query the view, we barely even notice that what we are looking at is basically a bunch of flat files.

In ADLS the path to our delta lake looks like this:

https://YOURSTORAGEACCOUNTNAME.blob.core.windows.net/CONTAINER-NAME/path/to/delta/table-name.parquet/.

It is worth noting that the delta table (table-name.parquet) is in itself implemented as a directory which contain a nested set of actual parquet files.

We define the VIEW as a SELECT FROM OPENROWSET, rounding it off with a WITH clause for defining the types.

CREATE VIEW [dbo].[sales_demo_view] AS

(

SELECT *

FROM OPENROWSET(

BULK '/CONTAINER-NAME/path/to/delta/table-name.parquet/',

DATA_SOURCE = 'ADLSStorage',

FORMAT='PARQUET'

)

WITH (

[id] BIGINT,

[customer_no] INT,

[sales_date] DATETIME2,

[sales_person_no] INT,

[item_no] VARCHAR(100),

[amount] DECIMAL(38,20),

) as [r]

)

GOTesting

Next, simply go ahead and test the view:

SELECT TOP 10 * FROM [sales_demo_view]Using in tabular editor

We need to do two things in Tabular Editor. Assuming that a Power BI Premium tenant exists and that a data model has been deployed to a workspace, we need to:

- Create a new Legacy Data Source.

- Write a query that runs against our Synapse On-Demand table.

For the data source, we use an ordinary SQL Server connection, and we authenticate with the SQL Server login my_login that we defined above. This step should be straightforward. If things are getting difficult, it is probably an issue on the Synapse side.

After the data source is created, right-click on it to get to the get tables dialogue box. This too is really easy, if everything is set up correctly.

Once the new table appears in the interface, save the model back to the Power BI service and go to SSMS to process the table.

Pitfalls

I’ve received quite a few errors while working on this. Here are a couple of the more interesting ones.

Failed to save modifications to the server. Error returned: '{"error":{"code":"DM_GWPipeline_Gateway_InvalidObjectNameException","pbi.error":{"code":"DM_GWPipeline_Gateway_InvalidObjectNameException","parameters":{},"details":[],"exceptionCulprit":1}}}

Technical Details:

RootActivityId: 1e7aa5c0-f493-4f52-8396-e1ca7d1b8d6f

Date (UTC): 8/27/2020 3:00:56 PM

'.This means SSAS does not recognize the Table name. I had a bad data source that Tabular Editor insisted on activating on my table. Reverting this manually solved the problem.

{"error":{"code":"DM_GWPipeline_Gateway_TimeoutException","pbi.error":{"code":"DM_GWPipeline_Gateway_TimeoutException","parameters":{},"details":[],"exceptionCulprit":1}}}This means that the query timeout has run out. You need to change this in the Legacy Data Source itself, as Tabular Editor seem to default to ten seconds.

Sources: